Logistic Regression in Python – Preparing Data

Before we can build a classifier, the data must be in the format the classifier expects. Here, that means converting the categorical columns into numeric ones using one-hot encoding.

Encoding the Data

We will discuss what we mean by encoding the data shortly. First, let’s run the code. Run the following command in the code window.

In [10]: # creating one hot encoding of the categorical columns.

data = pd.get_dummies(df, columns=['job', 'marital', 'default', 'housing', 'loan', 'poutcome'])

As the comment says, the above statement creates a one-hot encoding of the categorical columns. To see the result, print the first few rows of the new DataFrame, named “data”.

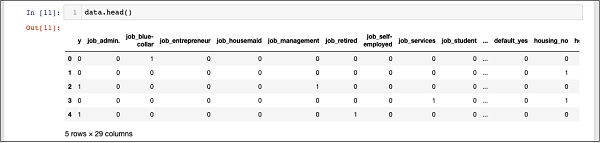

In [11]: data.head()

You will see the following output −

To understand the above data, we will list the column names by running the data.columns command as shown below −

In [12]: data.columns

Out[12]: Index(['y', 'job_admin.', 'job_blue-collar', 'job_entrepreneur',

'job_housemaid', 'job_management', 'job_retired', 'job_self-employed',

'job_services', 'job_student', 'job_technician', 'job_unemployed',

'job_unknown', 'marital_divorced', 'marital_married', 'marital_single',

'marital_unknown', 'default_no', 'default_unknown', 'default_yes',

'housing_no', 'housing_unknown', 'housing_yes', 'loan_no',

'loan_unknown', 'loan_yes', 'poutcome_failure', 'poutcome_nonexistent',

'poutcome_success'], dtype='object')

Now we will explain how the get_dummies command performs one-hot encoding. In the newly generated DataFrame, the first column is the “y” field, which indicates whether the customer has subscribed to a term deposit (TD). Next, look at the encoded columns. The first encoded column is “job”. In the original data, the “job” column has many possible values, such as “admin.”, “blue-collar”, “entrepreneur”, and so on. For each possible value, get_dummies creates a new column whose name is prefixed with the original column name.

Thus, we have columns named “job_admin.”, “job_blue-collar”, and so on. Each encoded field in the original data is replaced by a set of such columns, one per possible value, and each row is flagged only in the column that matches its original value. Examine the column list carefully to see how the data maps to the new DataFrame.
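To make the column naming concrete, here is a minimal sketch on a toy DataFrame. The data and the names `toy` and `encoded` are made up for illustration and are not the tutorial's bank dataset. The `dtype=int` argument is used so that the indicator columns are shown as 0/1, since recent pandas versions otherwise produce boolean columns.

```python
import pandas as pd

# Hypothetical toy data; not the tutorial's bank dataset.
toy = pd.DataFrame({
    "y": [0, 1, 0],
    "job": ["admin.", "blue-collar", "admin."],
    "marital": ["married", "single", "married"],
})

# One new column per distinct value, named <original column>_<value>.
# dtype=int keeps the indicators as 0/1 instead of booleans.
encoded = pd.get_dummies(toy, columns=["job", "marital"], dtype=int)

print(list(encoded.columns))
print(encoded)
```

The untouched “y” column stays first, followed by the indicator columns for “job” and then for “marital”, which mirrors the layout of the column list shown earlier.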

Understanding Data Mapping

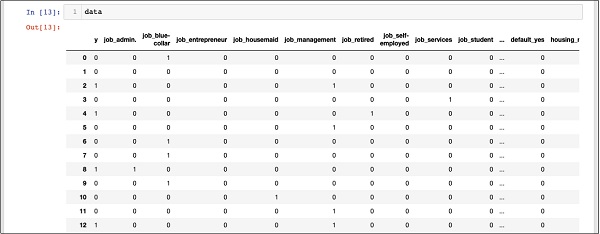

To understand the generated data, let’s print out the entire data using the data command. Part of the output after running this command is shown below.

In [13]: data

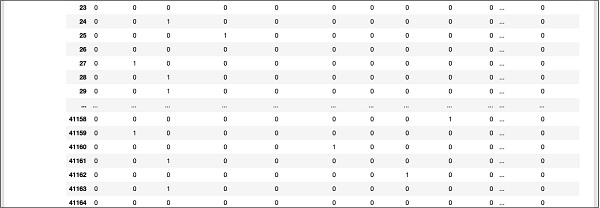

The above screen shows the first 12 rows. If you scroll further down, you will see that the mapping has been completed for all rows.

Here is a partial screen output further down the database for your quick reference.

To understand the mapped data, let’s examine the first row.

It says that this customer has not subscribed to a TD, as indicated by the value of the “y” field. It also indicates that this customer’s job is “blue-collar”. Scrolling horizontally will show you that the customer has a “housing” loan and no personal “loan”.
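Instead of scrolling, one way to read a single encoded row is to list only the indicator columns that are set. The sketch below uses a hypothetical one-row frame (`row_frame`) with a handful of the encoded columns; the values are chosen to match the description of the first row and are not copied from the real dataset.

```python
import pandas as pd

# Hypothetical one-row frame mimicking a few of the encoded columns.
row_frame = pd.DataFrame([{
    "y": 0,
    "job_admin.": 0, "job_blue-collar": 1,
    "housing_no": 0, "housing_yes": 1,
    "loan_no": 1, "loan_yes": 0,
}])

first = row_frame.iloc[0]

# Keep the names of the indicator columns that are set for this row,
# skipping the target column "y".
active = [name for name in first.index if name != "y" and first[name] == 1]

print("y =", first["y"])
print(active)
```

Applied to the tutorial's DataFrame, the same idea would be `data.iloc[0]` in place of `row_frame.iloc[0]`.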

After this one hot encoding, we still need to do some data processing before we can start building our model.

Ditching “unknown”

If you check the columns of the mapped DataFrame, you will find a few whose names end with “unknown”. For example, check the column at index 12 with the following command –

In [14]: data.columns[12]

Out[14]: 'job_unknown'

This column flags customers whose job is unknown. It does not make sense to include such a column in our analysis and model building. Therefore, all columns ending with “unknown” should be removed. This can be done with the following command:

In [15]: data.drop(data.columns[[12, 16, 18, 21, 24]], axis=1, inplace=True)

Make sure you specify the correct column numbers. If in doubt, you can always check a column’s name by passing its index to data.columns, as described earlier.
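Positional indices are easy to get wrong if the column order ever changes. As a possible alternative, the columns can be selected by name suffix. This is a sketch on a hypothetical miniature frame (`data_demo`), not the approach the tutorial itself uses.

```python
import pandas as pd

# Hypothetical miniature stand-in for the encoded DataFrame.
data_demo = pd.DataFrame({
    "y": [0, 1],
    "job_admin.": [1, 0],
    "job_unknown": [0, 1],
    "marital_married": [1, 0],
    "marital_unknown": [0, 1],
})

# Collect every column whose name ends with "_unknown".
unknown_cols = [c for c in data_demo.columns if c.endswith("_unknown")]

# drop(columns=...) returns a new DataFrame and leaves data_demo unchanged.
cleaned = data_demo.drop(columns=unknown_cols)

print(unknown_cols)
print(list(cleaned.columns))
```

On the tutorial's DataFrame, the same list comprehension over `data.columns` should pick out the five columns at indices 12, 16, 18, 21 and 24.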

After removing the unnecessary columns, you can check the final column list as shown in the output below –

In [16]: data.columns

Out[16]: Index(['y', 'job_admin.', 'job_blue-collar', 'job_entrepreneur',

'job_housemaid', 'job_management', 'job_retired', 'job_self-employed',

'job_services', 'job_student', 'job_technician', 'job_unemployed',

'marital_divorced', 'marital_married', 'marital_single', 'default_no',

'default_yes', 'housing_no', 'housing_yes', 'loan_no', 'loan_yes',

'poutcome_failure', 'poutcome_nonexistent', 'poutcome_success'],

dtype='object')

At this point, our data is ready for model building.