Python Deep Learning Artificial Neural Networks

Artificial neural networks, or neural networks for short, are not a new idea. They have been around for about 80 years.

Deep neural networks only became popular around 2011, thanks to new technologies, the availability of huge datasets, and more powerful computers.

An artificial neuron is loosely modeled on a biological neuron, which has dendrites, a nucleus, an axon, and axon terminals.

For a network, we need at least two neurons. These neurons transmit information through synapses between the axon terminals of one neuron and the dendrites of the other.

A possible model of an artificial neuron might look like this:

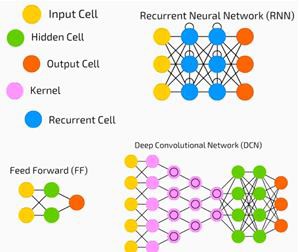

A neural network might look like the one shown below.

The circles are neurons, or nodes, each of which applies a function to the data it receives. The lines (edges) connecting them carry the weighted information being passed from one neuron to the next.

Each column is a layer. The first layer, which receives your data, is the input layer, and the last layer is the output layer. All the layers between the input and output layers are hidden layers.

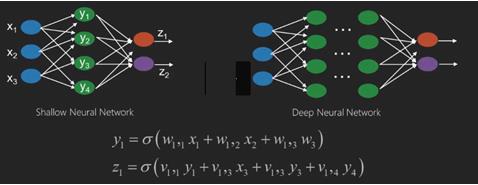

If you have one or a few hidden layers, then you have a shallow neural network. If you have many hidden layers, then you have a deep neural network.

In this model, you take the input data, multiply each input by a weight, and pass the result through a function in the neuron, which is called a threshold function or activation function.

Basically, the neuron sums all the weighted values and compares that sum to a certain threshold value. If the sum reaches the threshold, the neuron fires a signal and the result is (1); if nothing fires, the result is (0). That output is then weighted and passed to the next neuron, where the same function is run.
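As a rough illustration of the threshold idea, here is a minimal sketch of a single neuron in plain Python. The function name `step_neuron` and the input, weight, and threshold values are made up for this example; they do not come from any library.

```python
def step_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


# Hypothetical example values, chosen only for illustration.
inputs = [1.0, 0.0, 1.0]
weights = [0.5, -0.6, 0.25]

# The weighted sum is 0.5 + 0.0 + 0.25 = 0.75.
fired = step_neuron(inputs, weights, threshold=0.7)   # 0.75 >= 0.7, so the neuron fires
silent = step_neuron(inputs, weights, threshold=1.0)  # 0.75 < 1.0, so nothing fires
print(fired, silent)
```

Whether the comparison uses `>=` or `>` is a design choice; this sketch assumes the neuron fires when the sum is exactly at the threshold.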

Instead of a hard threshold, we can use a sigmoid function as the activation function. It maps any input to a value between 0 and 1.
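A sigmoid activation can be sketched in a few lines of standard-library Python. The helper names `sigmoid` and `sigmoid_neuron`, and the optional `bias` parameter, are assumptions made for this example rather than part of the model described above.

```python
import math


def sigmoid(z):
    """Map any real number to a value between 0 and 1."""
    # Two branches avoid overflow in math.exp for large negative z.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sigmoid_neuron(inputs, weights, bias=0.0):
    """Weighted sum of the inputs (plus an assumed bias term) passed through sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


print(sigmoid(0.0))                               # the midpoint of the curve
print(sigmoid_neuron([1.0, 2.0], [0.5, -0.25]))   # weighted sum is 0.0 here
```

Unlike the 0/1 threshold, the output changes smoothly with the input, which is what makes gradient-based training possible.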

As for the weights, they start out random, and each connection into a node/neuron has its own weight.
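One way to picture random starting weights is the sketch below. The function `init_weights`, the uniform range controlled by `scale`, and the `seed` argument are all assumptions for illustration; real libraries use a variety of initialization schemes.

```python
import random


def init_weights(n_inputs, n_neurons, scale=0.1, seed=None):
    """Return one list of random weights per neuron, one weight per input."""
    rng = random.Random(seed)  # a seed makes the run repeatable
    return [
        [rng.uniform(-scale, scale) for _ in range(n_inputs)]
        for _ in range(n_neurons)
    ]


# Hypothetical layer: 2 neurons, each connected to 3 inputs.
weights = init_weights(n_inputs=3, n_neurons=2, seed=42)
for row in weights:
    print(row)
```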

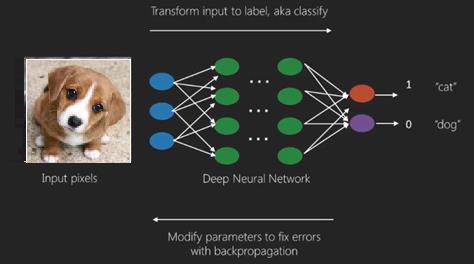

In a typical “feedforward” neural network, the most basic type of neural network, you pass your information straight through the network you’ve created, and then compare the network's output to the desired output from your sample data.

From here, you need to adjust the weights to help your output match your desired output.

Sending data straight through the network in this way is called a forward pass, and it is what gives the feedforward neural network its name.

Our data goes sequentially from the input layer, through the hidden layers, and then to the output layer.
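The layer-by-layer flow can be sketched as a loop in plain Python. Everything here is an assumption for illustration: the names `layer_forward` and `feedforward`, the use of sigmoid in every layer, the bias terms, and the hard-coded weights of a small network with 2 inputs, 3 hidden neurons, and 1 output.

```python
import math


def sigmoid(z):
    # Fine for the moderate values used in this example.
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute the output of one layer: one weighted sum and activation per neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Pass the data through each layer in order, from input to output."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical 2-3-1 network with made-up weights and biases.
layers = [
    ([[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.3, -0.1, 0.2]], [0.05]),                                # output layer
]
output = feedforward([1.0, 0.5], layers)
print(output)
```

The output of each layer becomes the input of the next, which is all that "sequentially" means here.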

When we go backward through the network to work out how much each weight contributed to the loss/cost, and then adjust the weights to reduce it, this is called backpropagation.
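To make the backward step concrete, here is a minimal sketch for the smallest possible network: one input, one hidden sigmoid neuron, and one output sigmoid neuron. The squared-error loss, the bias terms, the learning rate, and all starting values are assumptions chosen for this example.

```python
import math


def sigmoid(z):
    # Fine for the moderate values used in this example.
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, params):
    """Forward pass: input -> hidden neuron -> output neuron."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = sigmoid(w2 * h + b2)
    return h, y


def loss(y, target):
    """Squared-error loss (an assumed choice of cost function)."""
    return 0.5 * (y - target) ** 2


def backward(x, target, params):
    """Apply the chain rule from the output back toward the input."""
    w1, b1, w2, b2 = params
    h, y = forward(x, params)
    # Output neuron: d(loss)/d(pre-activation) = (y - target) * sigmoid'.
    delta2 = (y - target) * y * (1.0 - y)
    # Hidden neuron: pass the error back through w2 and the hidden sigmoid.
    delta1 = delta2 * w2 * h * (1.0 - h)
    # Gradients in the same order as params: w1, b1, w2, b2.
    return [delta1 * x, delta1, delta2 * h, delta2]


def train(x, target, params, learning_rate=0.5, steps=200):
    """Repeatedly move each parameter a small step against its gradient."""
    for _ in range(steps):
        grads = backward(x, target, params)
        params = [p - learning_rate * g for p, g in zip(params, grads)]
    return params


params = [0.4, 0.0, -0.3, 0.0]   # made-up starting values
x, target = 1.0, 1.0             # a single made-up training example
loss_before = loss(forward(x, params)[1], target)
trained = train(x, target, params)
loss_after = loss(forward(x, trained)[1], target)
print(loss_before, loss_after)
```

Real networks do the same thing with many neurons per layer, so the scalar products above become matrix operations.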

This is an optimization problem. With neural networks, in practice, we have to deal with hundreds of thousands of variables, or millions, or even more.

The classic approach is to use stochastic gradient descent as the optimization method. Today, there are also options like AdaGrad and Adam. Either way, this is a massive computational operation, which is why neural networks were largely relegated to the sidelines for over half a century. Only recently have our machines had the power and architecture to carry out these operations, along with appropriately sized datasets.
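The core of stochastic gradient descent is an update made after each individual sample. The sketch below applies it to a deliberately tiny problem, fitting a line to noise-free points, rather than to a neural network. The function name `sgd_linear_fit`, the learning rate, the number of epochs, and the data are all assumptions for illustration.

```python
import random


def sgd_linear_fit(data, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w * x + b by updating w and b after every single sample."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # visit the samples in a new random order each epoch
        for x, y in samples:
            error = (w * x + b) - y
            # Gradient of 0.5 * error**2 with respect to w and b.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b


# Made-up data lying exactly on the line y = 2x + 1.
data = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(-10, 11)]
w, b = sgd_linear_fit(data)
print(w, b)
```

Optimizers such as AdaGrad and Adam keep the same loop but adapt the step size for each parameter instead of using one fixed learning rate.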

For simple classification tasks, neural networks perform about as well as other simple algorithms such as k-nearest neighbors. Their real advantage tends to show when we have larger datasets and more complex problems, where they often outperform other machine learning models.